With graphics cards finally back in stock, I've put together this comprehensive GPU performance hierarchy of the past two (now starting on three) generations of graphics cards. These are the best graphics cards around, but it's also important to know what you're getting. I've benchmarked all of these cards, at multiple resolutions and settings. Here's how they stack up across a test suite of 15 games.

I've also listed current GPU prices, which have improved substantially over the past few months. Most AMD graphics cards are now selling at or below their official MSRPs, and Nvidia's fastest GPUs (RTX 3080 Ti, RTX 3090, and RTX 3090 Ti) are also well below their MSRPs. The RTX 4090 and RTX 4080 have also launched, alongside the various Intel Arc GPUs. Here's the current list, updated regularly as new GPUs arrive.

GPU Benchmarks and Performance Hierarchy


| Graphics Card | Overall Performance | 4K Ultra | 1440p Ultra | 1080p Ultra | 1080p Medium | Online Price (MSRP) | Specifications |
|---|---|---|---|---|---|---|---|
| GeForce RTX 4090 | 182.5 | 128.7 | 167.2 | 179.8 | 225.6 | $2,177 ($1,599) | AD102, 16384 shaders, 2520 MHz, 82.6 TFLOPS, 24GB GDDR6X@21Gbps, 1008GB/s, 450W |
| GeForce RTX 4080 | 162.9 | 100.7 | 148.2 | 167.9 | 217.0 | $1,249 ($1,199) | AD103, 9728 shaders, 2505 MHz, 48.7 TFLOPS, 16GB GDDR6X@22.4Gbps, 717GB/s, 320W |
| GeForce RTX 3090 Ti | 144.7 | 83.0 | 128.2 | 153.5 | 209.7 | $1,449 ($1,999) | GA102, 10752 shaders, 1860 MHz, 40.0 TFLOPS, 24GB GDDR6X@21Gbps, 1008GB/s, 450W |
| Radeon RX 6950 XT | 137.7 | 74.6 | 124.4 | 151.3 | 210.9 | $799 ($1,099) | Navi 21, 5120 shaders, 2310 MHz, 23.7 TFLOPS, 16GB GDDR6@18Gbps, 576GB/s, 335W |
| GeForce RTX 3090 | 136.0 | 75.1 | 119.2 | 145.5 | 205.2 | $1,298 ($1,499) | GA102, 10496 shaders, 1695 MHz, 35.6 TFLOPS, 24GB GDDR6X@19.5Gbps, 936GB/s, 350W |
| GeForce RTX 3080 Ti | 132.4 | 72.6 | 115.7 | 141.9 | 201.3 | $1,149 ($1,199) | GA102, 10240 shaders, 1665 MHz, 34.1 TFLOPS, 12GB GDDR6X@19Gbps, 912GB/s, 350W |
| GeForce RTX 3080 12GB | 129.0 | 70.1 | 112.5 | 138.4 | 198.3 | $899 (N/A) | GA102, 8960 shaders, 1845 MHz, 33.1 TFLOPS, 12GB GDDR6X@19Gbps, 912GB/s, 350W |
| Radeon RX 6900 XT | 127.6 | 66.9 | 113.4 | 142.2 | 203.2 | $669 ($999) | Navi 21, 5120 shaders, 2250 MHz, 23.0 TFLOPS, 16GB GDDR6@16Gbps, 512GB/s, 300W |
| GeForce RTX 3080 | 123.9 | 65.9 | 106.5 | 132.8 | 197.5 | $732 ($699) | GA102, 8704 shaders, 1710 MHz, 29.8 TFLOPS, 10GB GDDR6X@19Gbps, 760GB/s, 320W |
| Radeon RX 6800 XT | 120.8 | 62.1 | 107.2 | 135.1 | 196.5 | $597 ($649) | Navi 21, 4608 shaders, 2250 MHz, 20.7 TFLOPS, 16GB GDDR6@16Gbps, 512GB/s, 300W |
| Radeon RX 6800 | 108.0 | 53.5 | 93.8 | 120.6 | 187.6 | $499 ($579) | Navi 21, 3840 shaders, 2105 MHz, 16.2 TFLOPS, 16GB GDDR6@16Gbps, 512GB/s, 250W |
| GeForce RTX 3070 Ti | 106.0 | 50.6 | 91.0 | 117.4 | 182.0 | $549 ($599) | GA104, 6144 shaders, 1770 MHz, 21.7 TFLOPS, 8GB GDDR6X@19Gbps, 608GB/s, 290W |
| GeForce RTX 3070 | 100.2 | 46.6 | 85.3 | 112.2 | 176.0 | $529 ($499) | GA104, 5888 shaders, 1725 MHz, 20.3 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 220W |
| GeForce RTX 2080 Ti | 97.9 | 47.9 | 82.7 | 107.6 | 169.2 | $997 ($999) | TU102, 4352 shaders, 1545 MHz, 13.4 TFLOPS, 11GB GDDR6@14Gbps, 616GB/s, 250W |
| Radeon RX 6750 XT | 95.7 | 44.3 | 80.5 | 109.4 | 180.3 | $420 ($549) | Navi 22, 2560 shaders, 2600 MHz, 13.3 TFLOPS, 12GB GDDR6@18Gbps, 432GB/s, 250W |
| GeForce RTX 3060 Ti | 91.0 | 41.1 | 76.5 | 102.3 | 166.0 | $389 ($399) | GA104, 4864 shaders, 1665 MHz, 16.2 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 200W |
| Radeon RX 6700 XT | 90.2 | 41.6 | 75.5 | 103.4 | 171.3 | $379 ($489) | Navi 22, 2560 shaders, 2581 MHz, 13.2 TFLOPS, 12GB GDDR6@16Gbps, 384GB/s, 230W |
| GeForce RTX 2080 Super | 83.4 | 37.2 | 70.9 | 94.4 | 152.5 | $599 ($699) | TU104, 3072 shaders, 1815 MHz, 11.2 TFLOPS, 8GB GDDR6@15.5Gbps, 496GB/s, 250W |
| Radeon RX 6700 10GB | 81.3 | 35.1 | 68.0 | 95.2 | 158.0 | $329 (N/A) | Navi 22, 2304 shaders, 2450 MHz, 11.3 TFLOPS, 10GB GDDR6@16Gbps, 320GB/s, 175W |
| Intel Arc A770 16GB | 80.9 | 39.4 | 68.4 | 89.6 | 139.0 | $349 ($349) | ACM-G10, 4096 shaders, 2100 MHz, 17.2 TFLOPS, 16GB GDDR6@17.5Gbps, 560GB/s, 225W |
| GeForce RTX 2080 | 79.9 | 35.0 | 68.0 | 91.2 | 147.2 | $699 ($699) | TU104, 2944 shaders, 1710 MHz, 10.1 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 215W |
| Radeon RX 6650 XT | 73.7 | 32.2 | 60.4 | 86.0 | 147.7 | $299 ($399) | Navi 23, 2048 shaders, 2635 MHz, 10.8 TFLOPS, 8GB GDDR6@17.5Gbps, 280GB/s, 180W |
| GeForce RTX 2070 Super | 73.7 | 31.8 | 62.5 | 84.7 | 137.0 | $499 ($499) | TU104, 2560 shaders, 1770 MHz, 9.1 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 215W |
| Radeon RX 6600 XT | 72.0 | 31.4 | 58.4 | 84.0 | 145.6 | $279 ($399) | Navi 23, 2048 shaders, 2589 MHz, 10.6 TFLOPS, 8GB GDDR6@16Gbps, 256GB/s, 160W |
| Intel Arc A750 | 71.4 | 33.8 | 59.9 | 79.0 | 128.6 | $289 ($289) | ACM-G10, 3584 shaders, 2050 MHz, 14.7 TFLOPS, 8GB GDDR6@16Gbps, 512GB/s, 225W |
| GeForce RTX 3060 | 69.8 | 31.6 | 57.6 | 78.3 | 131.0 | $299 ($329) | GA106, 3584 shaders, 1777 MHz, 12.7 TFLOPS, 12GB GDDR6@15Gbps, 360GB/s, 170W |
| GeForce RTX 2070 | 65.5 | 28.2 | 55.6 | 75.3 | 122.1 | $499 ($399) | TU106, 2304 shaders, 1620 MHz, 7.5 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 175W |
| GeForce RTX 2060 Super | 62.2 | 26.4 | 52.5 | 72.0 | 116.8 | $331 ($399) | TU106, 2176 shaders, 1650 MHz, 7.2 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 175W |
| Radeon RX 5700 XT | 61.7 | 29.3 | 53.3 | 73.7 | 125.8 | $298 ($399) | Navi 10, 2560 shaders, 1905 MHz, 9.8 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 225W |
| Radeon RX 6600 | 60.8 | 25.9 | 49.0 | 71.6 | 125.4 | $209 ($329) | Navi 23, 1792 shaders, 2491 MHz, 8.9 TFLOPS, 8GB GDDR6@14Gbps, 224GB/s, 132W |
| Radeon RX 5700 | 54.4 | 25.7 | 47.2 | 64.8 | 111.3 | $298 ($349) | Navi 10, 2304 shaders, 1725 MHz, 7.9 TFLOPS, 8GB GDDR6@14Gbps, 448GB/s, 180W |
| GeForce RTX 2060 | 51.9 | 20.6 | 42.1 | 60.8 | 106.2 | $229 ($299) | TU106, 1920 shaders, 1680 MHz, 6.5 TFLOPS, 6GB GDDR6@14Gbps, 336GB/s, 160W |
| GeForce RTX 3050 | 50.6 | 22.3 | 41.1 | 57.1 | 98.1 | $249 ($249) | GA106, 2560 shaders, 1777 MHz, 9.1 TFLOPS, 8GB GDDR6@14Gbps, 224GB/s, 130W |
| Radeon RX 5600 XT | 48.5 | 22.5 | 42.0 | 58.1 | 100.6 | $279 ($279) | Navi 10, 2304 shaders, 1750 MHz, 8.1 TFLOPS, 6GB GDDR6@14Gbps, 336GB/s, 160W |
| GeForce GTX 1660 Super | 36.5 | 15.4 | 31.5 | 44.4 | 82.8 | $195 ($229) | TU116, 1408 shaders, 1785 MHz, 5.0 TFLOPS, 6GB GDDR6@14Gbps, 336GB/s, 125W |
| Radeon RX 5500 XT 8GB | 33.3 | 15.0 | 28.5 | 39.8 | 72.6 | $229 ($199) | Navi 14, 1408 shaders, 1845 MHz, 5.2 TFLOPS, 8GB GDDR6@14Gbps, 224GB/s, 130W |
| GeForce GTX 1660 | 32.9 | 13.7 | 28.5 | 39.9 | 75.1 | $199 ($219) | TU116, 1408 shaders, 1785 MHz, 5.0 TFLOPS, 6GB GDDR5@8Gbps, 192GB/s, 120W |
| Radeon RX 5500 XT 4GB | 27.9 | 11.5 | 23.3 | 33.5 | 66.9 | $149 ($179) | Navi 14, 1408 shaders, 1845 MHz, 5.2 TFLOPS, 4GB GDDR6@14Gbps, 224GB/s, 130W |
| GeForce GTX 1650 Super | 26.5 | 10.5 | 21.0 | 33.2 | 67.9 | $209 ($169) | TU116, 1280 shaders, 1725 MHz, 4.4 TFLOPS, 4GB GDDR6@12Gbps, 192GB/s, 100W |
| Radeon RX 6500 XT | 25.9 | 9.3 | 18.8 | 31.9 | 68.5 | $159 ($199) | Navi 24, 1024 shaders, 2815 MHz, 5.8 TFLOPS, 4GB GDDR6@18Gbps, 144GB/s, 107W |
| Intel Arc A380 | 25.8 | 9.4 | 21.2 | 31.0 | 58.7 | $139 ($139) | ACM-G11, 1024 shaders, 2000 MHz, 4.1 TFLOPS, 6GB GDDR6@15.5Gbps, 186GB/s, 75W |
| GeForce GTX 1650 | 20.0 | 7.4 | 15.8 | 26.6 | 51.1 | $156 ($159) | TU117, 896 shaders, 1665 MHz, 3.0 TFLOPS, 4GB GDDR5@8Gbps, 128GB/s, 75W |
| Radeon RX 6400 | 20.0 | 7.2 | 14.5 | 24.5 | 52.7 | $139 ($159) | Navi 24, 768 shaders, 2321 MHz, 3.6 TFLOPS, 4GB GDDR6@16Gbps, 128GB/s, 53W |

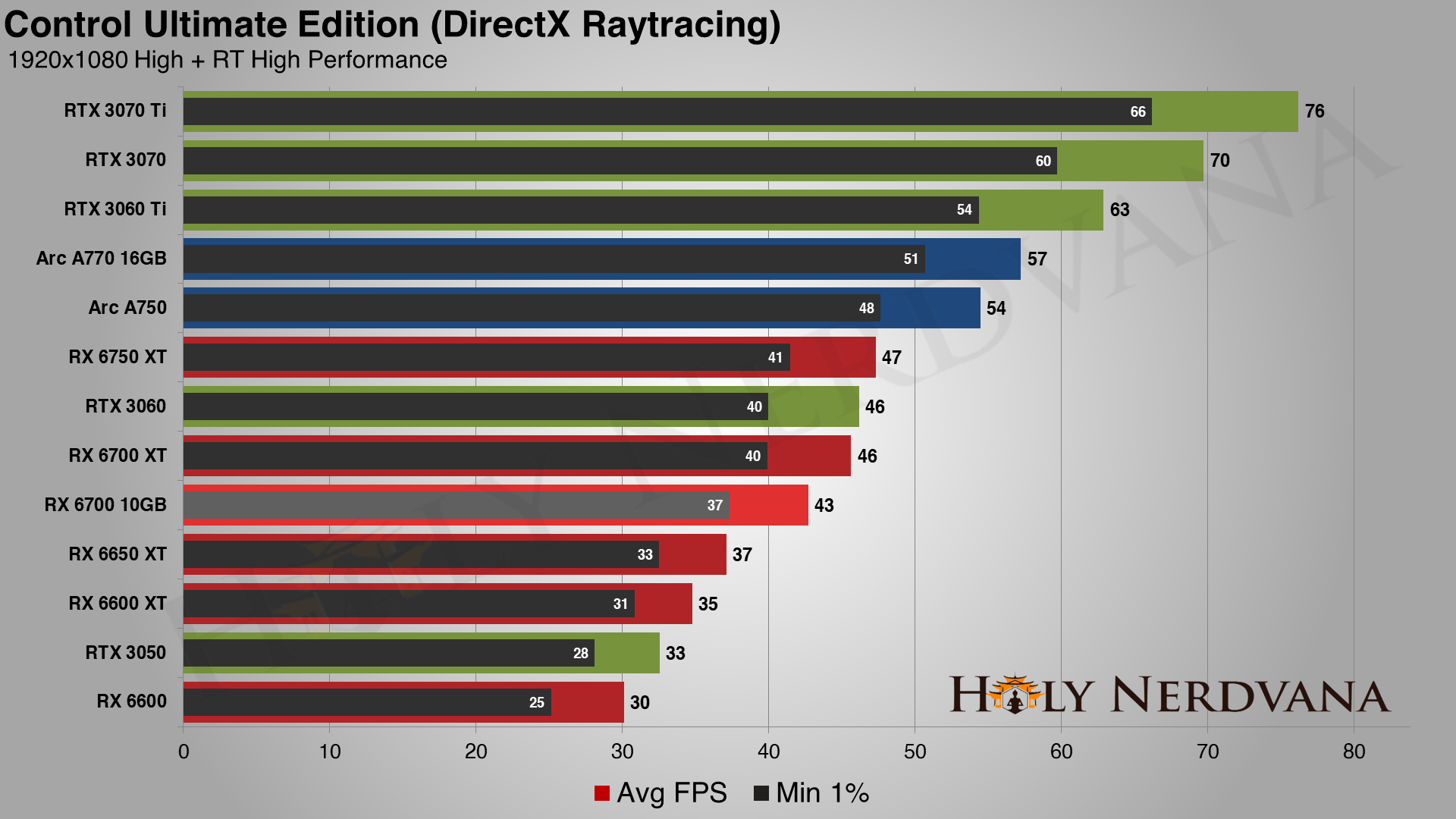

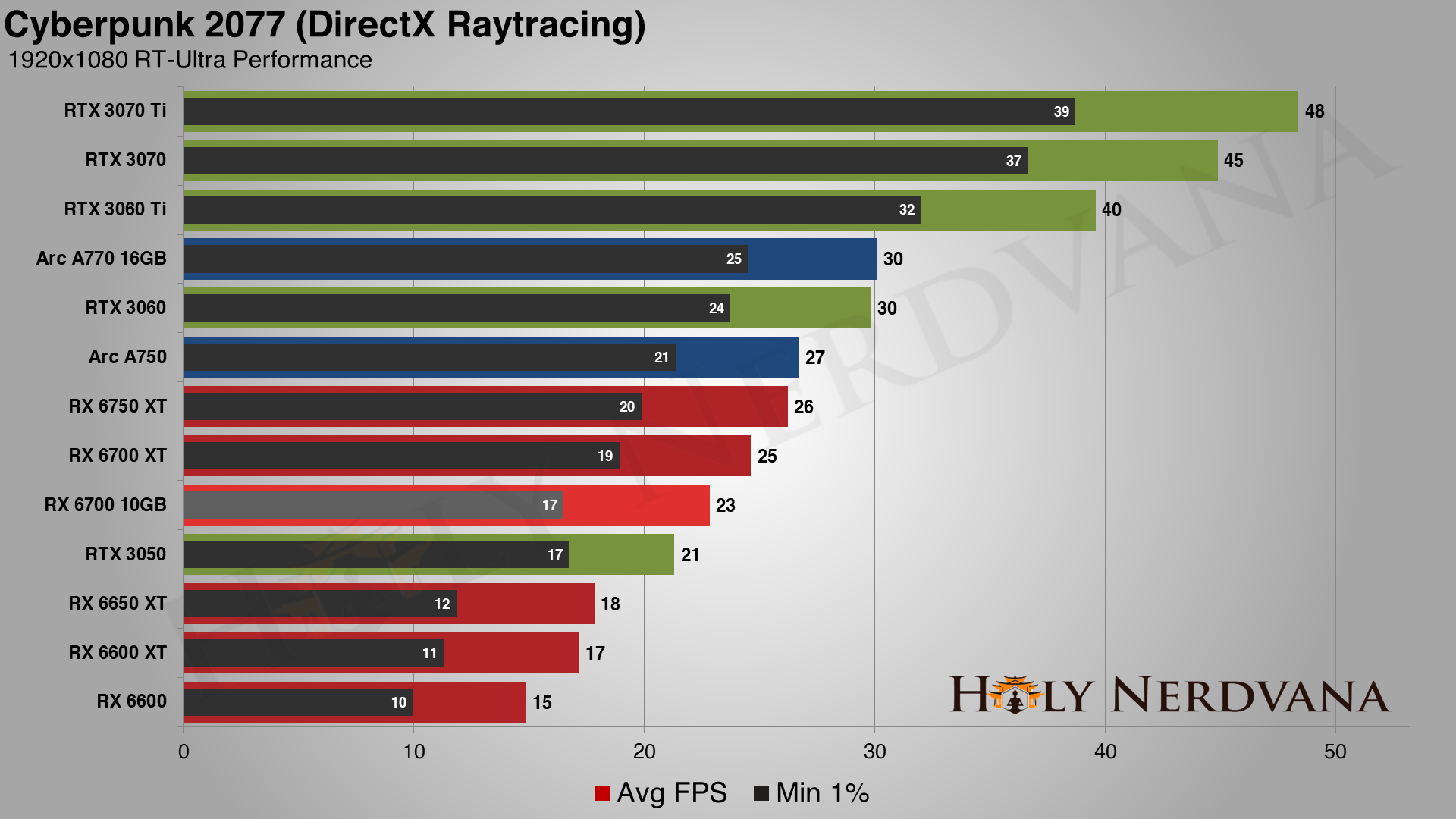

If you're looking at those numbers and wondering where they come from, they're a composite score generated by using the geometric mean of testing conducted at 1080p, 1440p, and 4K. Ray tracing hardware factors into the scores as well, which explains why Nvidia's RTX GPUs often outclass their AMD counterparts, and the same mostly goes for Intel Arc GPUs.
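For anyone unfamiliar with the geometric mean, here's a rough sketch of how it differs from a plain average. The fps values are made up for illustration, and `composite_score` is a hypothetical helper name. How the real scores weight resolutions, settings, and 1% lows isn't spelled out here, so treat this as the general idea only.

```python
from statistics import geometric_mean, mean

# Hypothetical average fps in three games for two cards (illustrative only,
# not taken from the benchmark suite).
card_a = [60.0, 120.0, 240.0]   # one very fast game inflates the plain average
card_b = [100.0, 120.0, 144.0]  # more even results across games


def composite_score(fps_results):
    """Geometric mean of per-game fps; every value must be positive."""
    return geometric_mean(fps_results)


for name, results in (("card_a", card_a), ("card_b", card_b)):
    print(
        f"{name}: arithmetic {mean(results):.1f}, "
        f"geometric {composite_score(results):.1f}"
    )
```

Both made-up cards end up with a geometric mean of about 120 fps, even though their arithmetic means differ (140 versus roughly 121). That's the appeal: one game with a huge frame rate can't dominate the overall result.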

Prices are not a factor in the above rankings, but I've linked to Amazon in the first column — not all GPUs are available there, or in stock. Obviously, previous generation parts tend to be far less desirable, as prices have not dropped nearly enough relative to the performance on tap. Note that I do get Amazon affiliate compensation if you use my links.

With the overall performance rankings out of the way, I've also selected my top picks for four markets: enthusiast, high-end, mainstream, and budget. Here's where price becomes a much bigger consideration.

The Best Enthusiast Graphics Card

GeForce RTX 4090 24GB ($1,599)

Nvidia's GeForce RTX 4090 is the new ruler of the graphics card world, particularly for 4K gaming and especially if you want to play games with ray tracing effects. It's expensive and also sold out right now, but if you can nab one... damn, is it fast! You also get 24GB of memory, DLSS support — including the new DLSS 3 Frame Generation technology to further boost performance, especially in CPU limited scenarios — and improved efficiency. This is without question the top enthusiast graphics card right now.

The Best High-End Graphics Card

Picking the best high-end GPU is a bit more difficult. The RTX 3080, 3070, and 3060 Ti are all worth a thought, but overall I give the edge to the Radeon RX 6750 XT. It has more memory, it performs nearly as well as the Nvidia cards — better if you're not worried about ray tracing effects — and it's reasonably priced starting at $449. You can also opt for the RX 6700 XT, which is only a few percent slower and costs about $50 less (for however long it remains in stock).

The Best Mainstream Graphics Card

There's little question about this one, as the RX 6600 delivers excellent performance and only costs $229 (sometimes less). Such prices were practically unheard of during the past two years for a GPU of this quality, but with the massive drop-off in GPU mining profitability, there appears to be a growing surplus of AMD's Navi 23 parts. My overall performance ranking puts it behind the RTX 3060, but that card costs about $100 more. Online prices are lower than even the RTX 3050, a card that's easily eclipsed by the RX 6600. This is arguably the best graphics card value right now. As a wild card alternative, you could also try to pick up an Intel Arc A750, which delivers superior performance and features... as long as the drivers don't bite you in the ass. Which they probably will.
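To put a number on "value," here's a quick sketch that divides the overall score by the online price, using the figures from the hierarchy table above. The "points per $100" metric and the `score_per_100_dollars` name are my own shorthand for this example, not part of the rankings, and the prices are a snapshot that will drift.

```python
# (overall score, online price in USD), copied from the hierarchy table above.
cards = {
    "Radeon RX 6600": (60.8, 209),
    "GeForce RTX 3060": (69.8, 299),
    "GeForce RTX 3050": (50.6, 249),
    "Intel Arc A750": (71.4, 289),
}


def score_per_100_dollars(score, price):
    """Overall-score points per $100 spent (an assumed, simplistic value metric)."""
    return 100.0 * score / price


# Best value first.
ranked = sorted(
    cards.items(),
    key=lambda item: score_per_100_dollars(*item[1]),
    reverse=True,
)

for name, (score, price) in ranked:
    print(f"{name:18s} {score_per_100_dollars(score, price):5.1f} points per $100")
```

By this crude measure the RX 6600 comes out on top and the RTX 3050 at the bottom. It ignores features, drivers, and power draw, so it's a starting point rather than a verdict.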

The Best Budget Graphics Card

This is a tough one, as the Radeon RX 6500 XT has a lot of limitations. It doesn't have much memory, it's limited to a 4-lane PCIe interface, it only has two display outputs, and it doesn't even have video encoding hardware. The problem is that the next step up basically puts us right back at the $250 midrange price point. Given you can find the RX 6500 XT for just $159, and it competes with the GTX 1650 Super that costs $60 more, there just aren't many other worthwhile options for under $200. Intel's Arc A380 would be an option, but it's continually sold out — which probably has more to do with a lack of supply than high demand.

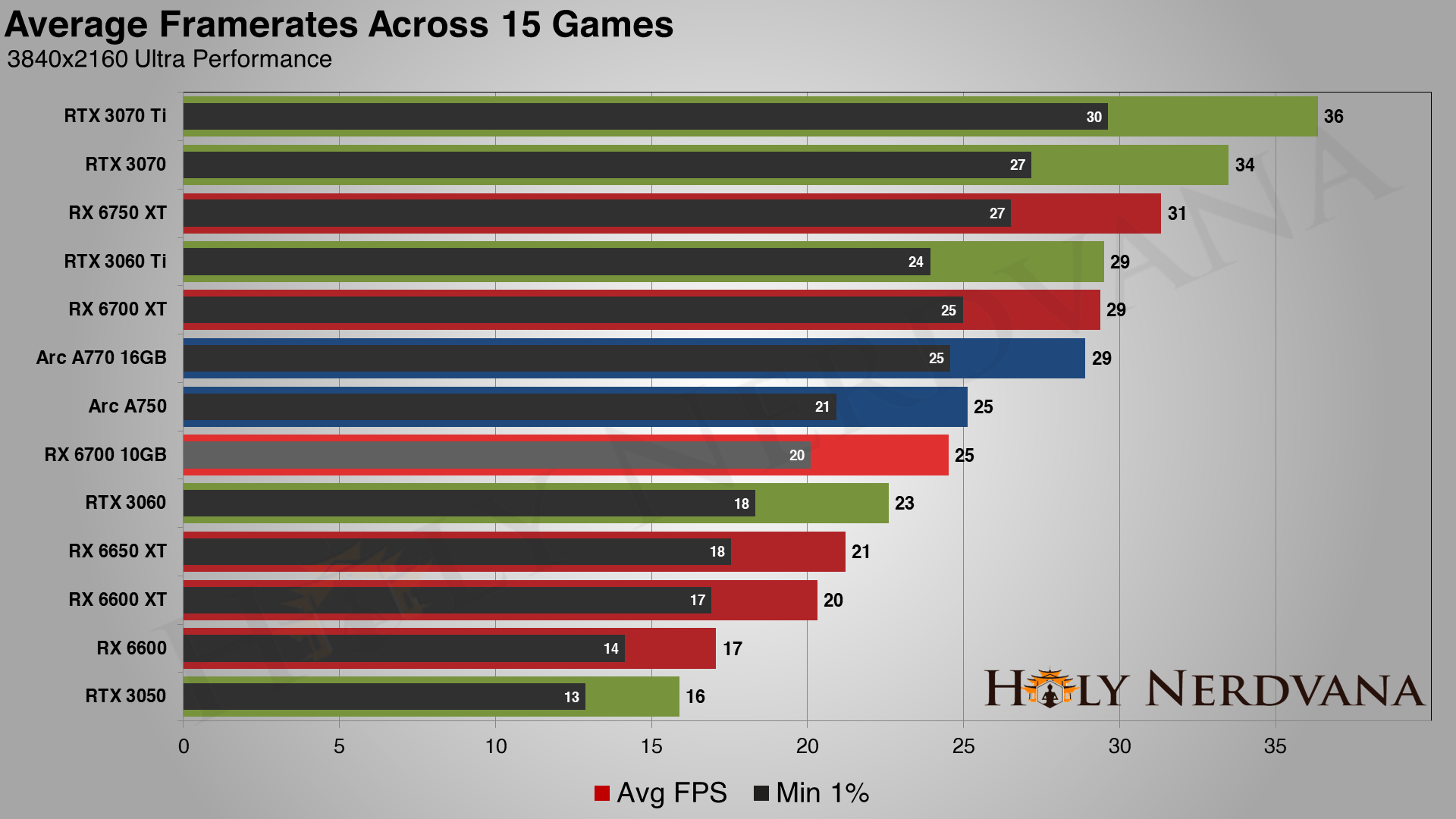

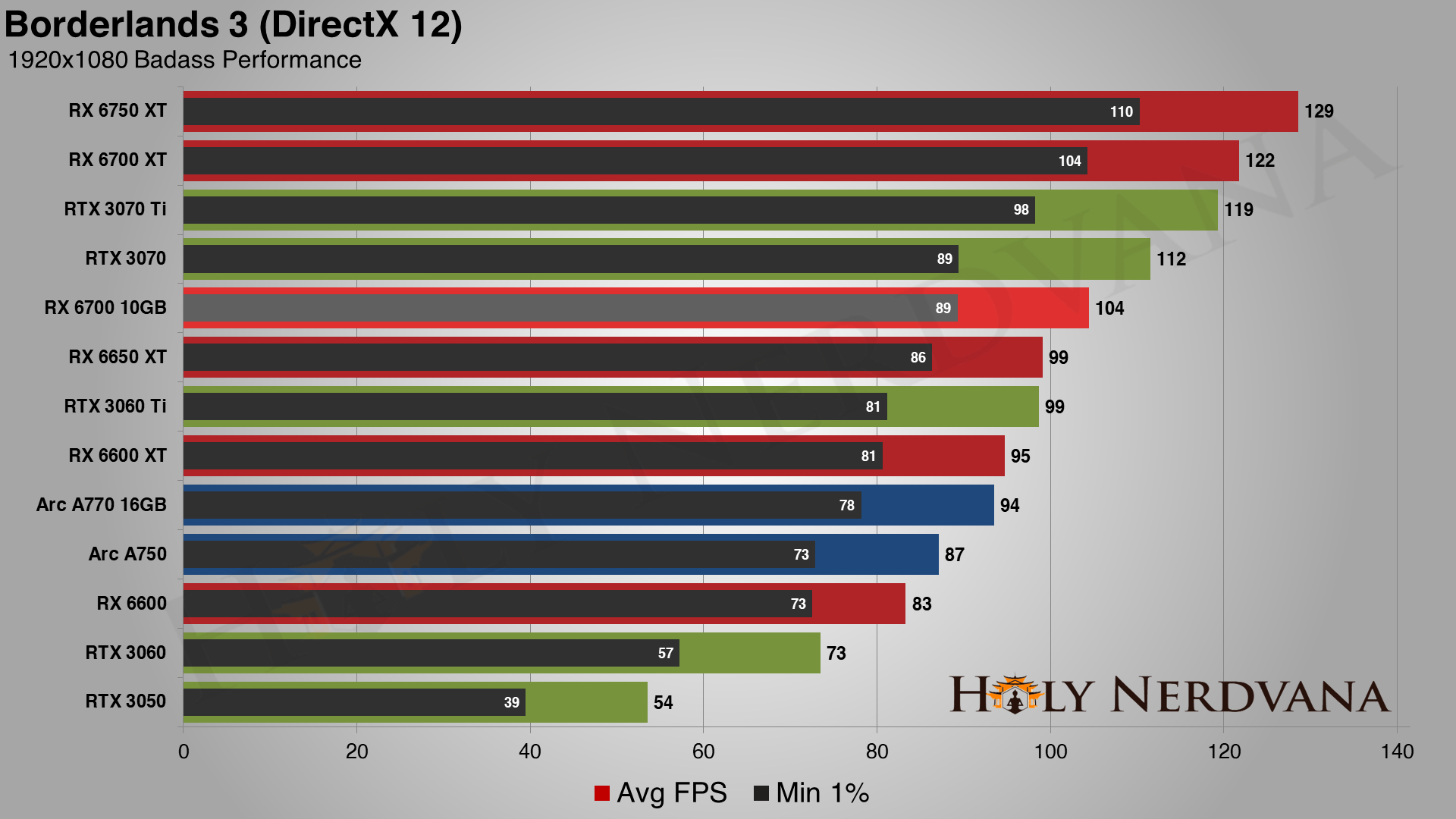

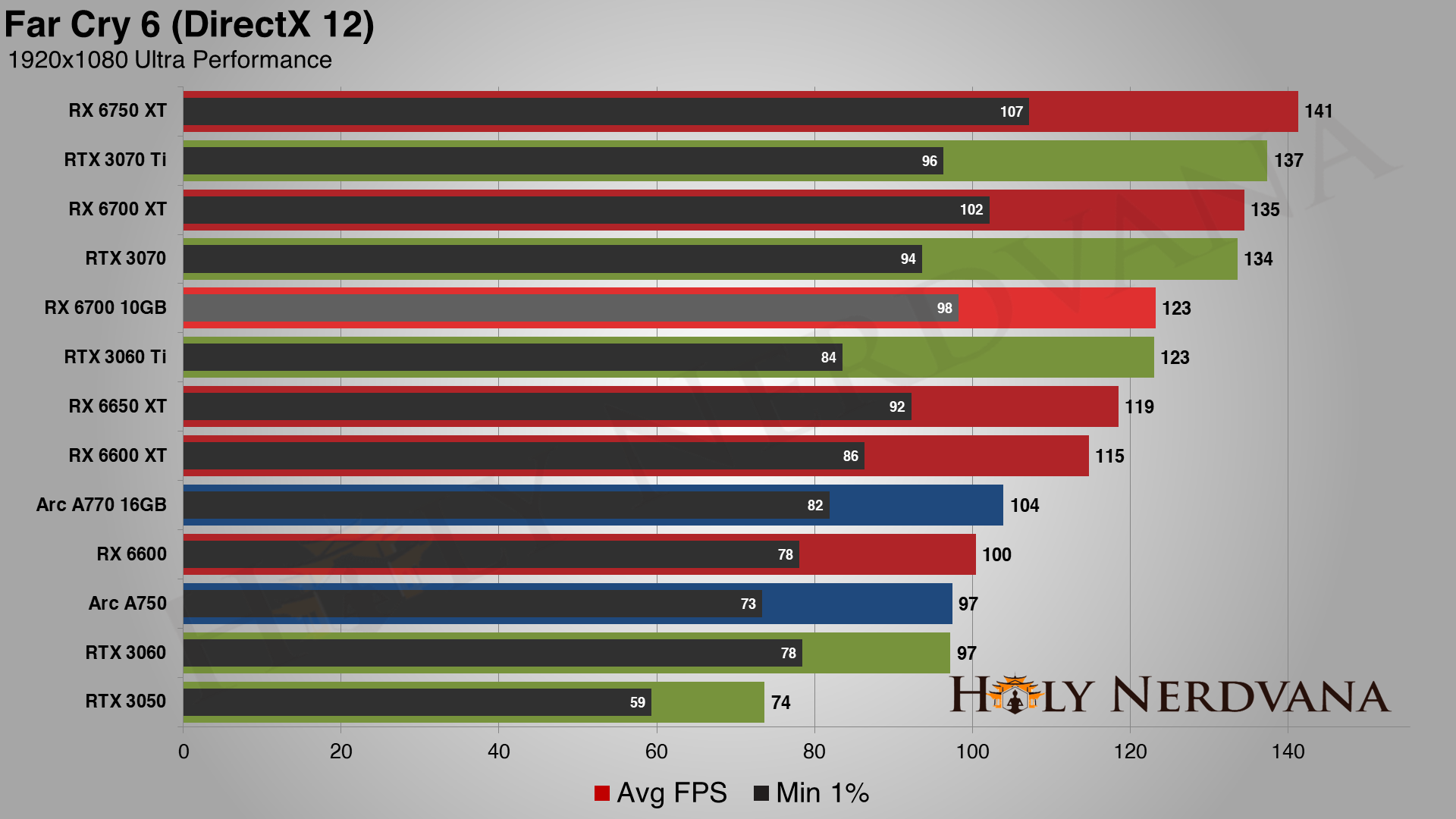

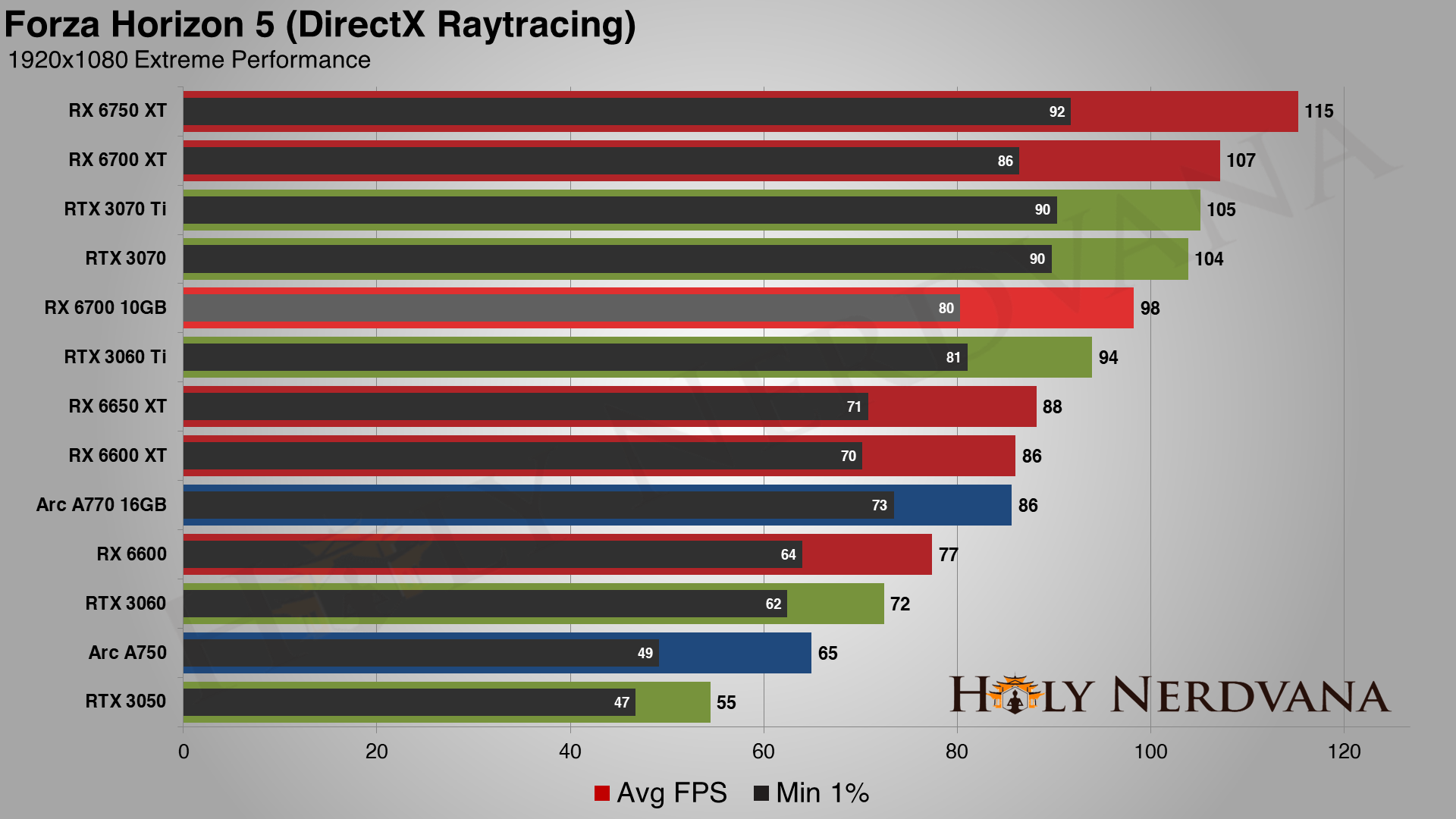

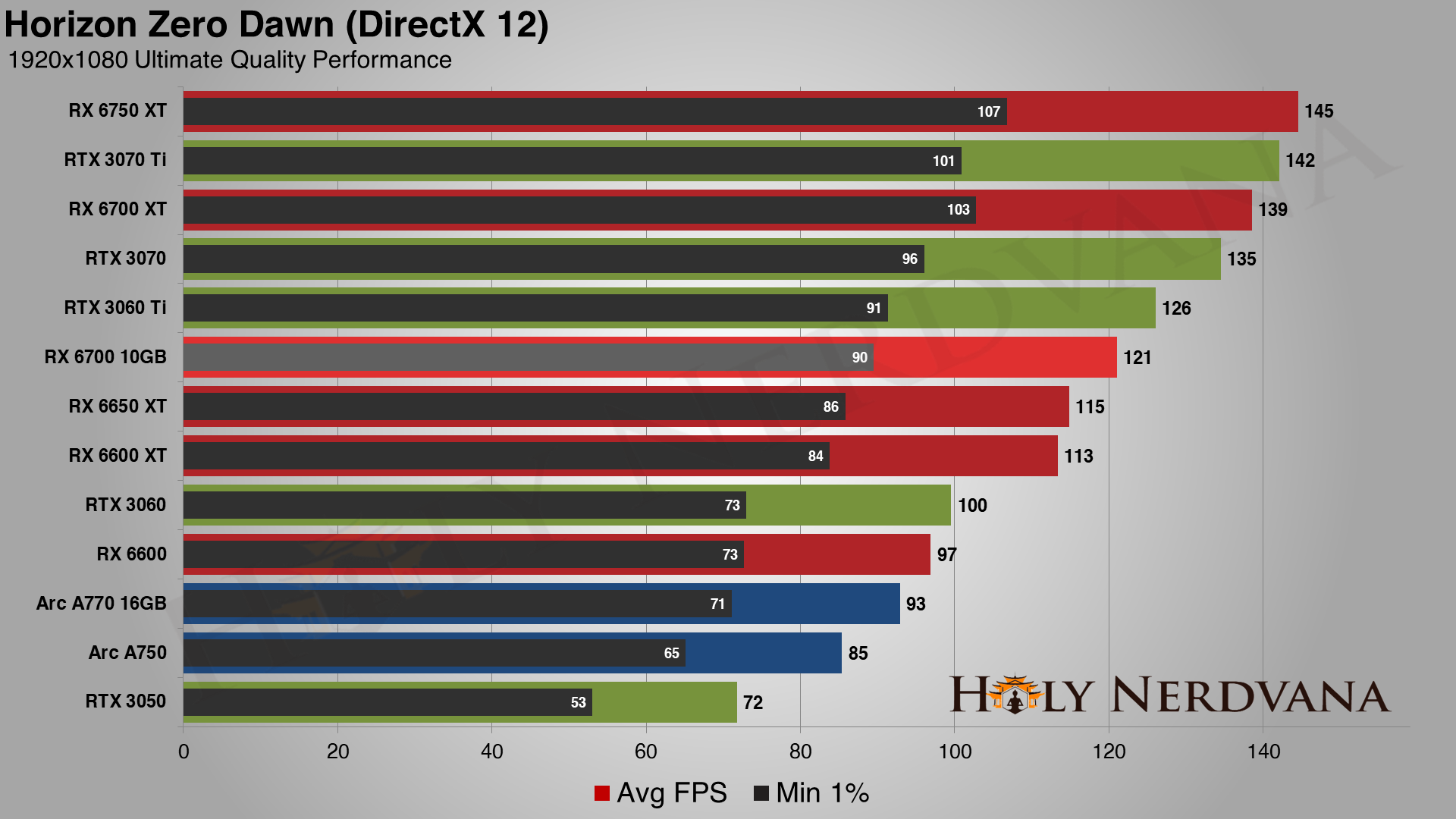

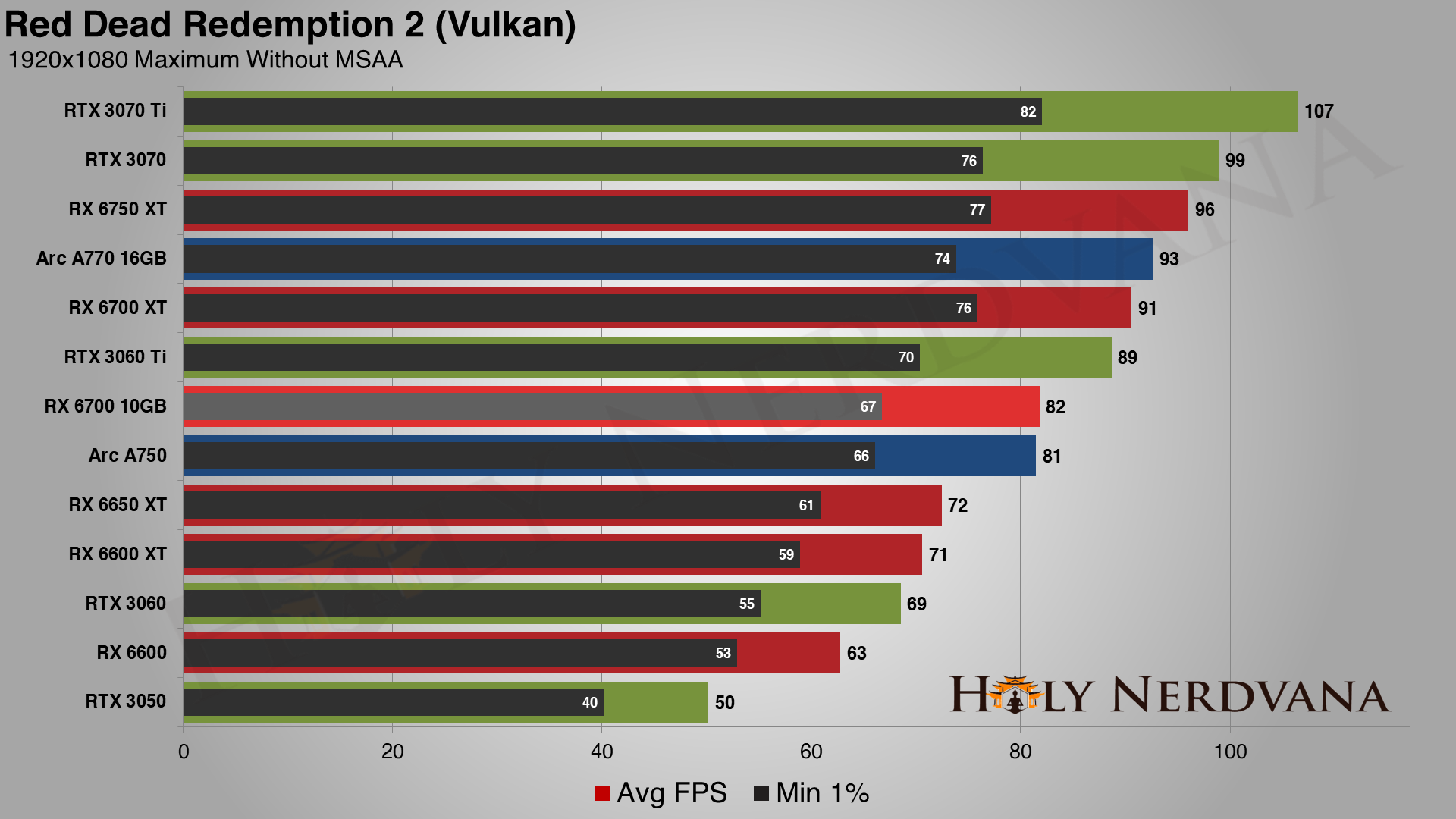

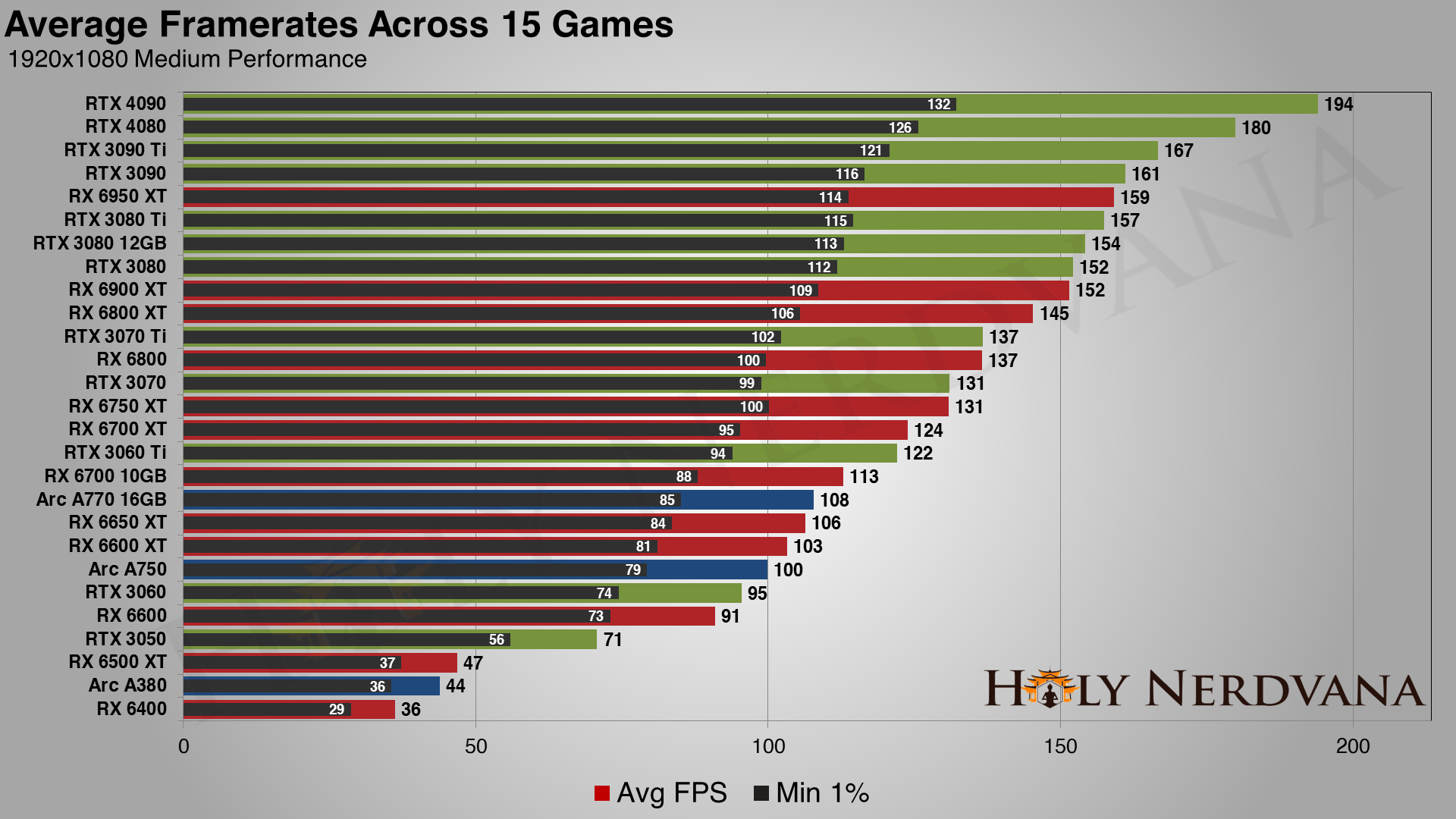

Performance Charts

If you want a slightly different view of the performance rankings, here are the four charts — this time without trying to smush everything into a single composite score. So instead of looking at average and 1% lows "combined," you're getting the pure, unadulterated performance data.
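If the two metrics are new to you, here's a minimal sketch of how average fps and a "1% low" can be derived from a list of frame times. Definitions of 1% lows vary between reviewers and tools. This version assumes it means the average frame rate over the slowest 1% of frames, which may not match how the charts were generated. The `fps_summary` helper and the sample frame times are hypothetical.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 1% low fps) for a list of frame times in milliseconds.

    Assumption: the 1% low is the average fps over the slowest 1% of frames.
    """
    n = len(frame_times_ms)
    if n == 0:
        raise ValueError("need at least one frame time")

    # Average fps is total frames divided by total time in seconds.
    avg_fps = 1000.0 * n / sum(frame_times_ms)

    # Take the slowest 1% of frames (at least one frame).
    k = max(1, n // 100)
    slowest = sorted(frame_times_ms, reverse=True)[:k]
    low_fps = 1000.0 * k / sum(slowest)

    return avg_fps, low_fps


# Made-up capture: 99 smooth frames at 10 ms plus a single 40 ms stutter.
avg, low = fps_summary([10.0] * 99 + [40.0])
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

A single stutter barely moves the average but drags the 1% low way down, which is why it's worth looking at both numbers.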

Looking Forward to More Ada and RDNA 3

Nvidia's next generation Ada Lovelace architecture (RTX 40-series) and AMD's next generation RDNA 3 architecture (RX 7000-series) are slated to arrive before the end of the year, with the RTX 4080 and 4090 now launched and the AMD 7900 series coming December 13. While I could argue that you should just wait for the new parts to arrive, there's little reason to expect great prices — the RTX 4090 and now RTX 4080 sold out within hours at most and are now mostly sitting at scalper prices. Again. But I don't expect the high prices to remain the norm for very long, as there's no longer profitable GPU mining to support outrageous prices.

If you already have a decent graphics card, there's no rush to upgrade today. Also keep in mind that the wafer costs on the upcoming architectures are going to be significantly higher, and prices were paid in advance, many months ago — when GPU prices were still far higher. 4nm/5nm class GPUs will simply be inherently more expensive to make, and AMD and Nvidia will want to try and keep higher profit margins.

Beyond the rest of 2022, I do expect to see RTX 4070 Ti and lower tier Ada Lovelace GPUs start showing up in early 2023. AMD will also follow up the RX 7900 series with various other 7000-series parts, with probably at least two 7800-class cards in early 2023. The mainstream and budget cards won't come for a while, though.
